Make the easy change hard

There’s a semi-well-known adage in software development that says when you have a hard code change, you should “first make the hard change easy, and then make the easy change.” In other words, refactor the code (or do whatever else you need to do) to simplify the change you’re trying to make before trying to make the change. This is especially good advice if you have a good automated test suite—you can first do your refactor without changing any program behaviour, and be relatively confident that you haven’t introduced any bugs or regressions in your refactor; and then you can make a smaller change that actually introduces your new feature.

Today I’m going to talk about how I followed the opposite of this advice for SimKube: I first made the easy change hard, and then I made the hard change. Because I can never do anything easy. Anyways this is going to be a fairly technical post, with a bit of a deep dive into SimKube internals and also a bunch of Rust programming techniques, so I hope you’ve had your coffee!

Who owns the owned objects?

First, a quick overview of the problem I needed to solve: in Kubernetes, resources can be owned by other resources. For example, a Deployment owns zero or more ReplicaSets, and each ReplicaSet owns zero or more Pods. These are tracked by setting an OwnerReference on the owned resource; if you wanted to know what Deployment a Pod belongs to, you would follow the chain of OwnerReferences from the Pod to the ReplicaSet to the Deployment12.

Recall that in SimKube, there is a component called the tracer, which lives in your production Kubernetes cluster and watches for changes to the resources in that cluster. You can then export those changes to a trace file for replay in your simulated environment. In the tracer, you can configure what resources to watch like this:

trackedObjects:

apps/v1.Deployment:

podSpecTemplatePaths:

- /spec/templateThe above config file tells the tracer to watch for changes to Deployments in the cluster3. In this case, when you replay a trace in your simulated environment, SimKube would create all of the Deployments specified in the trace file, and then the controller manager in your simulated cluster would create the ReplicaSets and Pods that are owned by those Deployments. This is all fine and good, but what would happen if you had a config file that looked like this?

trackedObjects:

apps/v1.Deployment:

podSpecTemplatePaths:

- /spec/template

apps/v1.ReplicaSet:

podSpecTemplatePaths:

- /spec/templateNow, the tracer would record changes to all Deployments and ReplicaSets in the cluster; when we replay this trace in the simulated environment, SimKube would create all of the Deployments, as well as all of the ReplicaSets owned by those Deployments. At the same time, the controller manager in the simulation cluster would create a second copy of the ReplicaSets owned by those deployments. Whoops! Now we have two copies of our ReplicaSets. That’s no good.

Now normally, there would be no reason to have a config file like this, you could just track the “root objects” and call it a day. But now imagine a more complicated scenario, where you have a custom resource that you want to simulate (say, an Airflow DAG Resource) that creates Deployments; now you’re in a situation where some Deployments in your trace have owners and other Deployments are the root objects.

So this is the core problem I was trying to solve; in the tracer, we should be able to tell the difference between these two types of resources using the OwnerReference field; if an object that the tracer is tracking is owned by some other object that the tracer is tracking, we shouldn’t store it in the exported trace.

Arcs, Mutexes, and Channels, oh my!

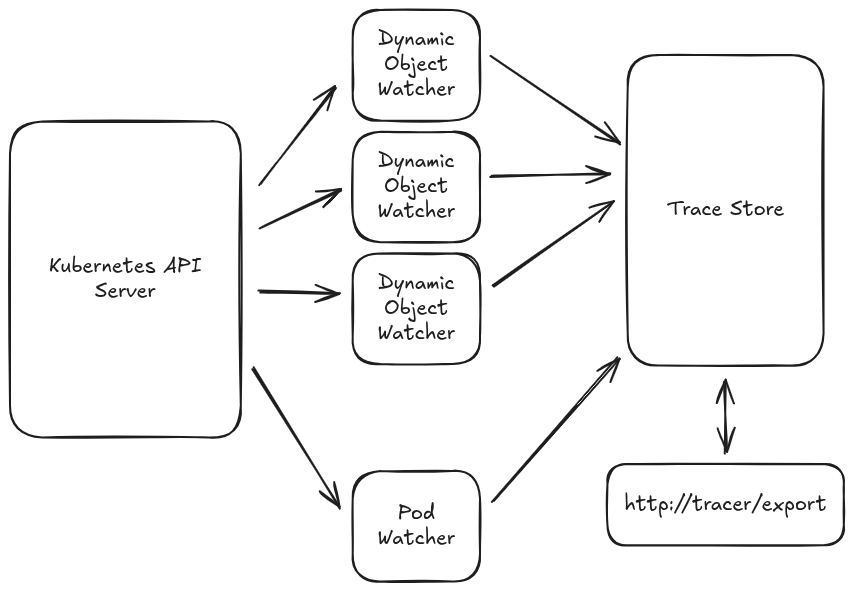

To understand how to fix this bug, we need to know a little bit about the internals of the tracer. Here’s how it looks:

Because the resources the tracer is watching are configured at runtime, we use the dynamic object API to set watches on the Kubernetes API server for these resources; there are n of these watchers, one for each configured resource type. There is also one watcher that tracks changes to Pod objects, for the purposes of recording Pod lifecycle events (creation, deletion, etc).

Each of these watchers communicates updates to the TraceStore, which is the in-memory storage backend for the tracer. Lastly, there is a Rocket server that exposes an /export endpoint to the TraceStore so that users can export a trace to long-term storage.

The way this used to work is that each of the watchers (as well as the Rocket server) held a reference to the store through an Arc<Mutex<TraceStore>>. If you’ve done any async Rust programming, you’ll recognize this pattern; it’s a way for objects on multiple threads or coroutines to safely read and write to a shared singleton. Now, in the old architecture, the Pod watcher also had a cache of OwnerReferences. This is so that we only track lifecycle data for pods that are owned by some higher-level resource in the trace.

Now we can get to the change I wanted to make: we want to look up owners for all resources, not just Pods. Easy change, right? Just stick the ownership cache in the TraceStore and call it a day! Boom, done.

I’m sure if you’ve done much async Rust programming, you see the problem with this plan, but let’s walk through it anyways. Specifically: why do we have a cache for the owner references? Looking up a recursive chain of owners to find the root owner involves repeated calls to the Kubernetes API server which a) could add additional load to the server, and b) will block other threads or coroutines while we hold an exclusive lock on the TraceStore. So instead, we look up the ownership chain once and then cache it, because ownership doesn’t change.

This was approximately fine when the Pod watcher was the only thing that needed access to the ownership cache, but now every resource update is going to potentially involve a bunch of API calls if there’s a cache miss, so we’re back to square one with regards to our mutex locks. The solution here, as I’m sure you’ve already seen, is to remove the TraceStore handles from each of the watchers, and instead communicate with the store via channels. Et voila, we’ve just made the easy change hard!

The Dude Abides Refactors

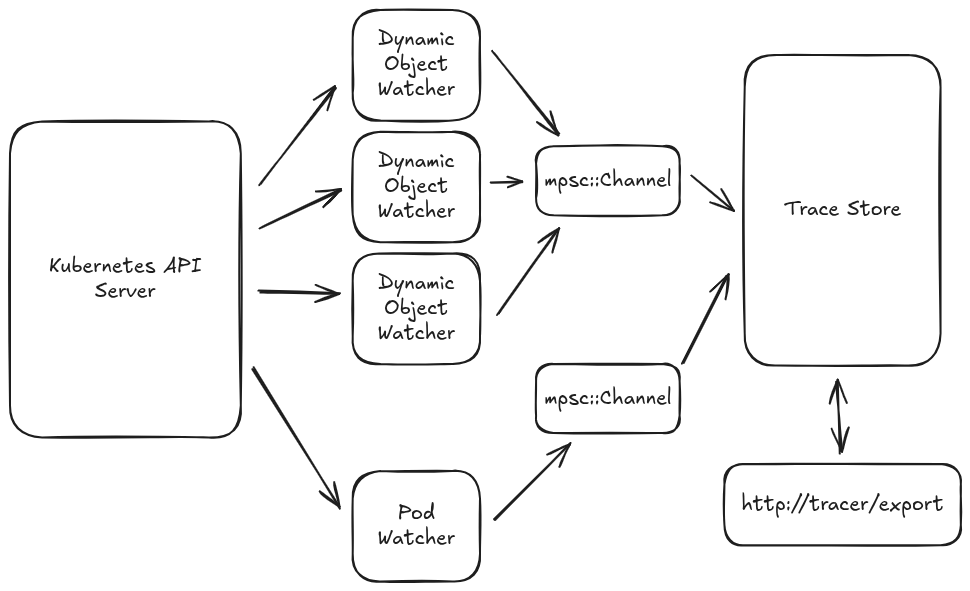

Yea I dunno where that headline came from either. Anyways let’s see what our new proposed architecture is going to look like:

Instead of having each watcher hold an Arc<Mutex<TraceStore>>, now the watchers have an mpsc::Channel::Writer4, which they use to send messages to the TraceStore. This decouples the watchers from the store: the watchers can now get updates from the Kubernetes API server as fast as it can send them, without blocking on any “expensive” operations that the TraceStore needs to do5. If you’ve done any Go programming, this is the standard pattern for goroutine communication.

This is objectively a better design, and is probably the one I should have gone with at the beginning6, but making the change now requires digging into some of the oldest code in the SimKube codebase. This is all code that I wrote back when a) I didn’t know as much about Rust as I do now, and b) I didn’t really know what I was doing with SimKube. So it’s gonna be a messy change.

The whole giant change is on GitHub (1, 2) if you just wanna look at the code, but here I’ll talk about some of the specific challenges I ran into.

Tokio versus the Standard Library

Most of the primitives that we need (channels, mutexes, etc) are implemented in the Rust standard library. They are, confusingly, also implemented in tokio, which is the most popular async runtime for Rust, and also the async runtime SimKube uses. Why are there both? Well, locks on the tokio::Mutex can be held across .await points, where as the standard library Mutex cannot. The tokio documentation states that you probably don’t want to use this because it is more expensive and you can “probably” refactor your code to not hold the Mutex across a wait.

Unfortunately, we can’t use std::mpsc::Channel because they are not Send, and thus can’t be used in an async or multi-threaded environment. We must use tokio’s versions of these channels, which then implies that we must hold a Mutex lock across the channel’s .await. The tokio documentation doesn’t really explain what the performance impacts of its fancy Mutex are, and suggests that it is “easy” to not use them, but I spent quite a while trying to figure out a suitable way to architect it without using tokio::Mutex and eventually gave up. Would be nice if there was some better documentation about the tradeoffs I’m subjecting myself to here.

Mutable recv

A consequence of the above decision7 is that std::mpsc::Channels do not take a mutable self reference when you call recv, but tokio::mpsc::Channels do. I don’t know if this is an intentional design difference between the APIs or not (I assume it must be?) but the implication is that you can’t stick the channel receivers inside the TraceStore itself without wrapping them in an Arc<Mutex<...>>; aside from being really annoying to use, it didn’t make any sense at all to me to have an Arc<Mutex<TraceStore>> (which is actually a singleton object) that holds Arc<Mutex<Receiver>>s.

I was able to refactor around this by introducing an intermediate “manager” object that facilitates the communication between the watchers and the store. However, this introduces another wrinkle: tokio channels are async, which means ideally we’d spawn them in a separate task and let them go, but tokio tasks must be 'static. But the channels that I’m constructing are dynamically created at runtime, which means they can’t be 'static8. Figuring out how to coerce the compiler into accepting all this code was a bunch of time spent that I’ll never get back: the short version is that the main “message coordinator” routine is a static function that takes ownership of the channel receivers, but getting this to work required a bunch of fighting the Rust ownership model.

Rust ownership, Kubernetes ownership, it’s all ownership

Bringing this back to the quote at the beginning, I split this change across two PRs (1, 2). The first change was attempting to make the hard change easy by implementing the channel communication with the store, and the second change was my attempt to make the easy change. It turned out, though, that “making the easy change” wasn’t actually that easy. I realized extremely late in the process that filtering out resources owned by other tracked resources at ingest time was a bad decision: there are no guarantees on ordering for the messages the TraceStore receives, so it’s possible that it receives a message about a new ReplicaSet before it receives a message about the Deployment that owns the ReplicaSet. Then when it looks up the ReplicaSet’s ownership information, it won’t find anything, and we’ll end up with a duplicate in our simulation.

Instead, what we need to do is check the ownership conditions at export time. That is, when a user requests an exported trace, we then filter out all of the stored resources that are owned by something else9. But this required me to go in and do another refactor on how we filter out objects in our exported trace. At this point, I had spent multiple weeks in the refactoring mines and was very eager to get out of them, so I’m not 100% certain that this bit of code was the best thing ever, but it all seems to work (for now). Whew!

There is a new version of SimKube (2.4.0) on GitHub, crates.io, and quay.io that contains this new code, along with a couple smaller bug-fixes and improvements.

Anyways, I hope you enjoyed coming along for the ride on my SimKube refactoring adventure, and maybe hopefully you learned something about Kubernetes and/or Rust along the way!

As always, thanks for reading; until next time,

~drmorr

Kubernetes uses this information for garbage collection, among other things. Once all an object’s owners have been deleted, the object itself can safely be deleted.

It is actually possible for an object to have more than one OwnerReference; I’ve never seen a use-case for this, but I’m sure one exists.

The podSpecTemplatePaths field is used to tell SimKube where the definition of Pod(s) owned by the resource live; that way during a simulation, SimKube can sanitize and remove irrelevant fields from the Pod specification.

mpsc stands for “multiple producer, single consumer”, which is the most common type of channel and also the easiest to implement (or so I’ve been told). Other types of channels are “single producer, multiple consumer” and “multiple producer, multiple consumer”, which involve a bunch of other tradeoffs that we don’t need to worry about.

The TraceStore still needs to be behind an Arc<Mutex<...>> for the Rocket endpoint, but it will only be locked in the event that a user calls /export which is a comparatively rare event to receiving channel messages.

It also means that the simulation components (the controller and simulation driver) don’t need to construct a whole TraceStore to run their simulations, which was a weird consequence of the old design that I never liked.

Is it really a decision if there’s no other alternative?

At least not without some really weird and gross hacks.

There’s still a potential race condition here if the user requests an export between when the ReplicaSet update and the Deployment update happen, but that’s a pretty rare/unusual circumstance, and would be something that could easily be cleaned up after the fact in the exported trace file.